Yesterday, we looked at who gets polled. Click here if you missed it. If the wrong universe is selected, the results cannot be accurate. So let’s look at bias.

Polling companies are, more often than not, made up of humans. There are some AI-based polls, but trust me, they’re not ready for prime time. All humans have their biases. This shows up in how questions are asked, and what the answer choices are. How things are framed can greatly influence what numbers are generated.

Here’s an example. (In all cases, a good pollster would randomize the order of the names.)

Option 1: If the election were held today, for whom would you vote?

Notice the difference between Option Choice 1A, which just lists the two leaders, and Option Choice 1B, which lists them along with “Other” and “Wouldn’t Vote”. Option Choice 1A forces people to make a decision. In live polling, the response might be listed as “refused” if the person couldn’t make a choice, or they might just pick one, which may or may not be valid. By including “Other” and “Wouldn’t Vote”, the results will be more accurate and could potentially change the outcome as it relates to the two leaders.
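For the curious, here is a minimal sketch of the name randomization mentioned above, applied to response sets like 1A and 1B. The function name `randomized_choices` and the exact labels are my own assumptions for illustration, not any pollster's actual tooling. The idea is simply that the candidate names get shuffled for each respondent, while catch-all options such as “Other” stay at the end.

```python
import random

def randomized_choices(candidates, fixed_tail=(), rng=None):
    """Return answer choices with the candidate names in random order.

    Options in fixed_tail (for example "Other") are not shuffled and
    always appear last, in the order given.
    """
    rng = rng or random.Random()
    shuffled = list(candidates)   # copy so the caller's list is untouched
    rng.shuffle(shuffled)         # in-place shuffle of the copy
    return shuffled + list(fixed_tail)

# Hypothetical response sets modeled on Option Choices 1A and 1B.
leaders = ["Biden", "Trump"]
choice_1a = randomized_choices(leaders)
choice_1b = randomized_choices(leaders, fixed_tail=["Other", "Wouldn't Vote"])

print(choice_1a)
print(choice_1b)
```

Each respondent would get a fresh call, so over a large sample neither name benefits from always being read first.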

Adding in “Other” or “Unsure” creates a more accurate poll because there really are people who will write in someone who isn’t running, or who are still considering their options. John Bolton, for example, will be writing in Dick Cheney. Not joking. Source. As an aside, Bolton lives in Bethesda, so his vote won’t matter. Sorry, I digress.

In addition, as in Option Choice 1C, adding in the names of third party and independent candidates always generates different topline numbers. For example, RFK Jr. takes between 2% and 17% (in total) of voters from President Biden and Convicted Felon Trump. In some cases that benefits President Biden, in other cases it benefits Convicted Felon Trump, and in still other cases votes are taken from both candidates in close to equal amounts.

Why does this matter, since no third party or independent candidate has ever won a modern presidential election? In recent history, Ross Perot was a highly successful independent candidate in 1992, garnering 18.9% of the popular vote while carrying no states in the Electoral College. As it turns out, his voters were drawn from both Bill Clinton and George Bush. Had his nearly 19% come mostly from one or the other, it could have changed the outcome even without his winning any state. As an aside, early polling indicated that Perot was leading both Clinton and Bush. Source.
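To make the arithmetic concrete, here is a small sketch of how the same third-party share can help or hurt depending on whom it is drawn from. All of the numbers are made up for illustration (a 51-49 two-way race and a third candidate taking 10 points), and `margin_with_third_candidate` is a hypothetical helper, not anyone's real model.

```python
def margin_with_third_candidate(a, b, third, share_from_a):
    """Return candidate A's lead over B, in points, once a third
    candidate takes `third` points from the two of them.

    share_from_a is the fraction of the third candidate's support
    that comes out of A's column; the rest comes out of B's.
    """
    a_after = a - third * share_from_a
    b_after = b - third * (1 - share_from_a)
    return a_after - b_after

# Hypothetical two-way race: A 51, B 49, third candidate takes 10 points.
for share in (0.5, 0.8, 0.2):
    lead = margin_with_third_candidate(51, 49, 10, share)
    print(f"{share:.0%} drawn from A -> A leads by {lead:+.1f}")
```

With an even split, A keeps the original 2-point lead. If 80% of the third candidate's support comes from A, that lead flips to a 4-point deficit, and if only 20% does, the lead grows to 8 points.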

The bias of a pollster is shown in these choices – if they believe that only Trump or President Biden will win, they might just use Option Choice 1A, missing valuable data. That additional data is critical because it helps the campaigns in overall analysis, and informs those of us who will go out canvassing or talking to voters in other venues.

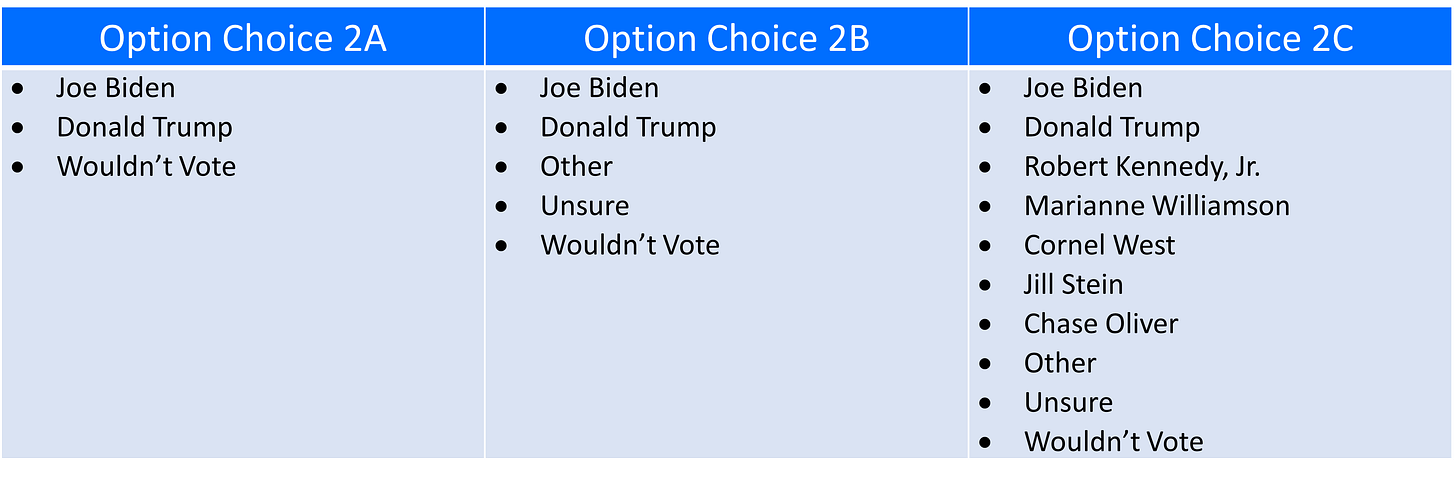

Option 2:

For whom will you vote in November?

Now let’s change the time from “if the election were held today” to “November”. There are people who would make a certain choice if the election were held today but might be on the fence about November, depending on things that happen between now and then. Focus groups in Michigan right before their 2024 primary, for example, indicated that some people who voted for President Biden in 2020 were on the fence at the time, but may well vote for him in November depending on how things are in the Middle East between the Primary and the General. (In the primary, 13.2% of voters chose “uncommitted”, which was below what multiple polls showed.) Therefore, a poll with “if the election were held today” might overstate or understate President Biden’s chances compared to November.

The point is that HOW the questions are phrased, and which response set is utilized, generate different responses and reflect the bias of the individual pollster. One caveat: some polls are undertaken at the behest of an organization, and then the pollster often “has help” in the development of the questions asked.

This can lead to push polls, which are polls where the “pollster” is not looking for legitimate responses, but looking to push people towards a certain candidate.

I have a friend who is a political non-combatant. She was push-polled in 2012 when the candidates were President Obama and Mitt Romney. She knew she was going to vote for President Obama’s re-election but went along once she realized it was a push poll.

The conversation went something like this:

Q: Who are you voting for in November? (Choices were provided)

A: President Obama

Q: Would it make you more likely or less likely to vote for Obama if you knew he was not an American, but a Kenyan, and was also a pedophile?

A: More likely

The “pollster” hung up.

And yet, that ended up being part of a published poll in 2012.

Talk about bias!

Many (if not most) pollsters want to get accurate information. But a review of pollsters based on how they model, the number of polls they undertake, and their poll results compared to the outcome of the next election shows that there are better pollsters and worse pollsters. 538 has been rating pollsters since Poblano admitted that he was really Nate Silver, and began publishing under the 538 moniker in 2008. You can see their ratings, and their methodology, here. As an aside, there is no statistically significant difference between the top 19 pollsters on the 538 list; all are considered very good.
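If you want a feel for what “poll results compared to the outcome” means in practice, here is a rough sketch. It is emphatically not 538’s methodology, which accounts for much more. The pollster names and margins below are invented, and `average_error` is a hypothetical helper that just averages how far each pollster’s final margin landed from the actual margin.

```python
from statistics import mean

# Hypothetical records: (pollster, polled margin, actual margin), in points.
records = [
    ("Pollster A", 3.0, 4.5),
    ("Pollster A", -1.0, 0.5),
    ("Pollster B", 6.0, 1.0),
    ("Pollster B", -2.0, 2.0),
]

def average_error(records):
    """Return each pollster's mean absolute miss, in points."""
    misses = {}
    for name, polled, actual in records:
        misses.setdefault(name, []).append(abs(polled - actual))
    return {name: mean(values) for name, values in misses.items()}

# Smallest average miss first.
ranking = sorted(average_error(records).items(), key=lambda item: item[1])
for name, miss in ranking:
    print(f"{name}: average miss of {miss:.1f} points")
```

In this invented data, Pollster A misses by 1.5 points on average and Pollster B by 4.5, so A would rank higher. A real rating would also need enough polls per pollster for the difference to mean anything.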

So, let’s say that you are looking at a poll from a good pollster, and they picked the best possible universe, and framed their questions optimally. There is still one more piece to the puzzle, which involves adjustments and weightings, which will be covered in tomorrow’s post.

Thanks for reading. Please leave any comments or questions you might have, and if you appreciated this information, please tell your friends!